MCP Servers: Integrating LLMs into E-Commerce Systems

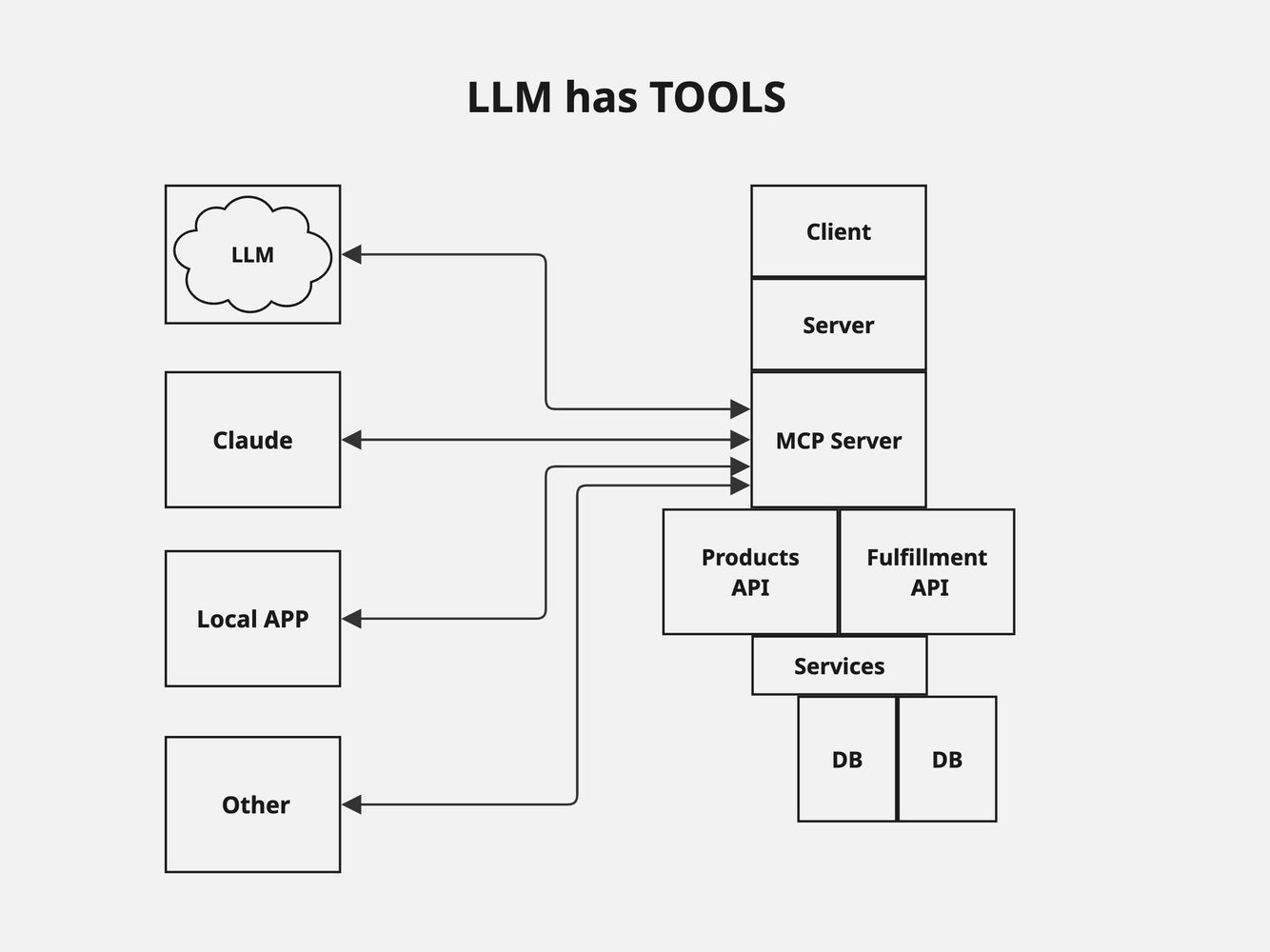

Three stages of integration: LLM is NOT aware, LLM is AWARE, LLM has TOOLS.

Large Language Models (LLMs) are reshaping how we think about user interactions, especially in commerce. This article explores how to move beyond basic chatbot implementations and toward a more integrated, architecture-aware approach. My focus is on enabling LLMs to reason over business logic, interact with internal systems through well-defined APIs, and participate as collaborative agents in e-commerce ecosystems. I walk through the mindset and the building blocks needed to design architectures where LLMs are not just responders but active nodes within the business flow: tool-aware, API-driven, and permission-controlled.

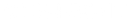

In a traditional e-commerce architecture, systems operate in isolation, driven by rule-based logic. Users interact with a frontend, which sends requests to backend services. Those services hit APIs or databases and return static data. If I were to drop an LLM into that flow without any enhancements, it would behave like a fancy chatbot—responding to inputs based on static prompts or pretraining, without any actual context or live data.

In this disconnected mode, the LLM can’t:

- Understand user intent dynamically.

- Autonomously trigger business logic.

- Interface with existing APIs like `search_products()` or `check_availability()`.

- Maintain contextual awareness of fulfillment pipelines or product state.

It's limited to surface-level interaction and lacks the hooks to become operationally useful.

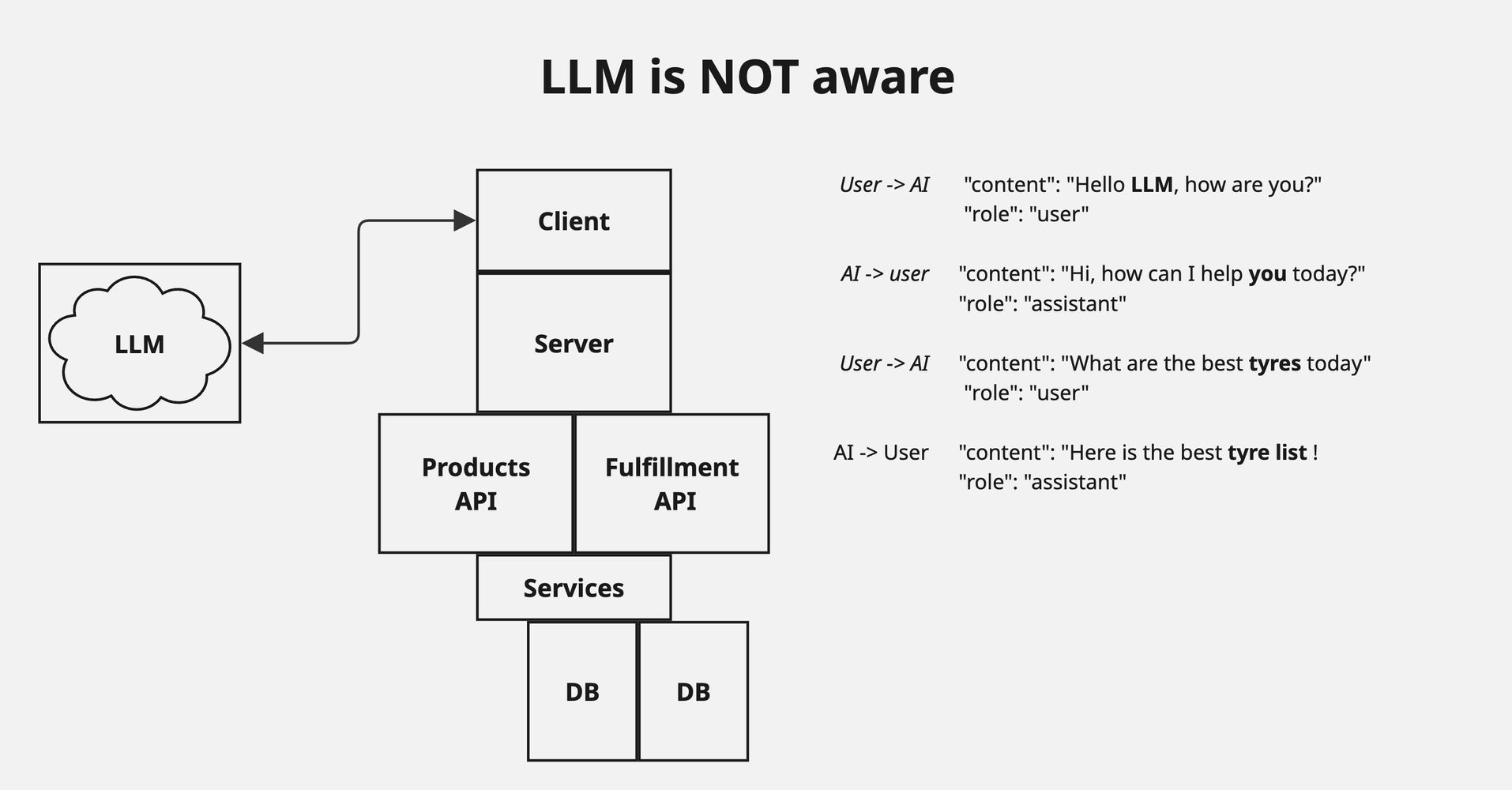

How the flow works

- User sends a message – Example: “What are the best tyres today?”

- Server sends the message to the LLM – Including current context and available tool definitions.

- LLM identifies it needs real data – It decides to call a function like `get_tyres`.

- Server handles the function call – It queries our APIs and structures the response for the LLM.

- LLM replies to the user – Using natural language and up-to-date information.

This establishes a feedback loop where the LLM can interact with backend systems like any other frontend component—but conversationally.
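The flow above can be sketched as a small server-side loop. This is a minimal illustration under stated assumptions, not a production integration: `fake_llm` is a hypothetical stand-in for a model API that supports tool calling, and the tyre catalogue and the `get_tyres(limit)` signature are invented for the example. What it shows is the division of labour: the model only requests a tool, while the server executes it and feeds the result back.

```python
import json
from typing import Any, Callable, Dict, List

# Hypothetical catalogue standing in for a real product API.
TYRES = [
    {"name": "AllSeason Pro", "rating": 4.6, "in_stock": True},
    {"name": "WinterGrip", "rating": 4.8, "in_stock": False},
    {"name": "EcoRoll", "rating": 4.2, "in_stock": True},
]

def get_tyres(limit: int = 2) -> List[Dict[str, Any]]:
    """Hypothetical backend call: best-rated tyres that are currently in stock."""
    available = [t for t in TYRES if t["in_stock"]]
    return sorted(available, key=lambda t: t["rating"], reverse=True)[:limit]

# Tools the server is willing to run on the model's behalf.
TOOLS: Dict[str, Callable[..., Any]] = {"get_tyres": get_tyres}

def fake_llm(messages: List[Dict[str, Any]], tool_names: List[str]) -> Dict[str, Any]:
    """Hypothetical stand-in for a tool-calling model."""
    last = messages[-1]
    if last["role"] == "user" and "tyres" in last["content"].lower() and "get_tyres" in tool_names:
        # The model decides it needs live data and requests a tool call.
        return {"type": "tool_call", "name": "get_tyres", "arguments": {"limit": 2}}
    if last["role"] == "tool":
        # The model phrases the tool result in natural language.
        names = ", ".join(t["name"] for t in json.loads(last["content"]))
        return {"type": "text", "content": f"Today's best in-stock tyres: {names}."}
    return {"type": "text", "content": "Sorry, I can't help with that."}

def handle_message(user_text: str, max_steps: int = 5) -> str:
    """Server-side loop: forward to the model, run requested tools, return the reply."""
    messages: List[Dict[str, Any]] = [{"role": "user", "content": user_text}]
    for _ in range(max_steps):
        reply = fake_llm(messages, list(TOOLS))
        if reply["type"] == "text":
            return reply["content"]
        # The server, not the model, executes the tool and feeds the result back.
        result = TOOLS[reply["name"]](**reply["arguments"])
        messages.append({"role": "assistant", "tool_call": reply})
        messages.append({"role": "tool", "name": reply["name"], "content": json.dumps(result)})
    return "Sorry, I couldn't complete that request."

print(handle_message("What are the best tyres today?"))
```

With a real provider, `fake_llm` would be replaced by a call to that provider's tool-calling API; `max_steps` caps how many tool round-trips a single user message can trigger.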

To make the LLM aware, I introduce a middleware layer that acts as a bridge between the LLM and business infrastructure. This layer interprets LLM intent as structured requests and translates those into real API calls.
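One way to picture this translation step, assuming the model emits tool calls as JSON with `name` and `arguments` fields. The route table, the `API_BASE` URL, and the argument names below are hypothetical. The middleware looks up which internal endpoint owns each tool, rejects arguments it does not expect, and only then builds the real request.

```python
import json
from typing import Dict, Set, Tuple
from urllib.parse import urlencode

# Hypothetical base URL and routes; each tool maps to the service that owns the data.
API_BASE = "https://api.example.com"
ROUTES: Dict[str, Tuple[str, str, Set[str]]] = {
    "get_tyres": ("GET", "/products/tyres", {"limit", "season"}),
    "check_stock": ("GET", "/inventory/stock", {"sku"}),
    "track_order": ("GET", "/orders/status", {"order_id"}),
}

def to_api_request(tool_call_json: str) -> Tuple[str, str]:
    """Translate a model's structured tool call into an HTTP method and URL."""
    call = json.loads(tool_call_json)
    name, args = call["name"], call.get("arguments", {})
    if name not in ROUTES:
        raise ValueError(f"unknown tool: {name}")
    method, path, allowed = ROUTES[name]
    unexpected = set(args) - allowed
    if unexpected:
        # Reject anything the model invented instead of forwarding it blindly.
        raise ValueError(f"unexpected arguments for {name}: {sorted(unexpected)}")
    query = urlencode(sorted(args.items()))
    return method, f"{API_BASE}{path}" + (f"?{query}" if query else "")

print(to_api_request('{"name": "check_stock", "arguments": {"sku": "TY-205-55"}}'))
```

Keeping the route table in the middleware means the model never sees internal URLs; it only knows tool names.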

The architecture allows the LLM to:

- Parse user queries into actionable commands.

- Request tool invocations securely.

- Call services such as `get_tyres`, `check_stock`, or `track_order`.

- Receive real-time responses from microservices.

- Construct informed replies based on actual product and business data.

So when someone asks, “What are the best tyres today?”, the LLM isn’t guessing—it parses intent, calls the product API, interprets the response, and explains its suggestions contextually. At this point, the LLM isn’t a chatbot anymore. It’s a reasoning agent embedded within my infrastructure.

Beyond awareness, LLMs become powerful when we give them structured tools. A tool can be any callable function or API that helps the LLM fulfill a user request.

To architect this:

- I design a Tool Invocation Protocol, where each tool is registered with metadata such as name, description, inputs, and expected outputs.

- The MCP (Model Context Protocol) server acts as a broker between the LLM and microservices.

- Tools can include `search_products`, `check_discount`, `recommend_bundle`, `place_order`, and `track_shipment`.
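A tool registry along these lines could back the Tool Invocation Protocol. This is a plain-Python sketch, not the official MCP SDK: the `ToolSpec` fields, the `tool` decorator, and the product data are illustrative assumptions, although the `name` / `description` / `inputSchema` keys loosely mirror how MCP servers describe their tools to clients.

```python
from dataclasses import dataclass, field
from typing import Any, Callable, Dict, List

@dataclass
class ToolSpec:
    """Metadata the broker can advertise to any LLM client."""
    name: str
    description: str
    input_schema: Dict[str, Any]  # JSON-Schema-style description of the inputs
    output_description: str
    handler: Callable[..., Any] = field(repr=False)

REGISTRY: Dict[str, ToolSpec] = {}

def tool(name: str, description: str, input_schema: Dict[str, Any], output_description: str):
    """Decorator that registers a function together with its metadata."""
    def decorator(fn: Callable[..., Any]) -> Callable[..., Any]:
        if name in REGISTRY:
            raise ValueError(f"duplicate tool name: {name}")
        REGISTRY[name] = ToolSpec(name, description, input_schema, output_description, fn)
        return fn
    return decorator

@tool(
    name="search_products",
    description="Search the catalogue by free-text query.",
    input_schema={"type": "object", "properties": {"query": {"type": "string"}}, "required": ["query"]},
    output_description="List of matching products with id, name and price.",
)
def search_products(query: str) -> List[Dict[str, Any]]:
    # Hypothetical in-memory catalogue standing in for a product service.
    catalogue = [{"id": "p1", "name": "EcoRoll tyre", "price": 89.0}]
    return [p for p in catalogue if query.lower() in p["name"].lower()]

def list_tools() -> List[Dict[str, Any]]:
    """What the broker sends to a client: metadata only, never the handlers."""
    return [
        {"name": s.name, "description": s.description,
         "inputSchema": s.input_schema, "output": s.output_description}
        for s in REGISTRY.values()
    ]

def call_tool(name: str, arguments: Dict[str, Any]) -> Any:
    """Check required inputs against the registered schema, then run the handler."""
    spec = REGISTRY[name]
    missing = [k for k in spec.input_schema.get("required", []) if k not in arguments]
    if missing:
        raise ValueError(f"missing required arguments: {missing}")
    return spec.handler(**arguments)
```

Because every assistant reads the same `list_tools()` output, adding a tool in one place makes it available to all connected clients.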

But it’s not limited to product APIs. We can expose fulfillment APIs, payment processors, and even automate placing orders directly from the chat—assuming secure access to the user’s profile and session data. If the path is authenticated and authorized, the LLM can assist with tasks like:

- Ordering a recommended product on behalf of the user.

- Selecting a preferred delivery method based on historical behavior.

- Tracking fulfillment and triggering updates via chat.
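A sketch of the authorization gate these operational tools need, assuming the storefront's existing auth layer already yields a session with a user id and a set of scopes. The `Session` type, the scope names (`orders:read`, `orders:write`), and the `place_order` signature are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Any, Dict, FrozenSet, Optional, Set

class NotAuthorized(Exception):
    """Raised when a session may not invoke a tool."""

@dataclass(frozen=True)
class Session:
    """Hypothetical session produced by the storefront's auth layer."""
    user_id: Optional[str]
    scopes: FrozenSet[str] = field(default_factory=frozenset)

# Hypothetical scope names; map them to whatever your identity provider issues.
REQUIRED_SCOPES: Dict[str, Set[str]] = {
    "track_shipment": {"orders:read"},
    "place_order": {"orders:write"},
}

def authorize(tool_name: str, session: Session) -> None:
    """Raise unless the session is signed in and holds every required scope."""
    required = REQUIRED_SCOPES.get(tool_name, set())
    if required and session.user_id is None:
        raise NotAuthorized(f"{tool_name} requires a signed-in user")
    missing = required - session.scopes
    if missing:
        raise NotAuthorized(f"{tool_name} is missing scopes: {sorted(missing)}")

def place_order(session: Session, product_id: str, delivery: str) -> Dict[str, Any]:
    authorize("place_order", session)
    # The user id comes from the session, never from model-supplied arguments,
    # so the LLM cannot place an order on someone else's behalf.
    return {"user_id": session.user_id, "product_id": product_id,
            "delivery": delivery, "status": "created"}
```

The key design choice is that identity comes from the authenticated session, not from arguments the model fills in; read-only tools such as product search simply have no entry in `REQUIRED_SCOPES`.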

This enables a fully personalized experience, where the assistant isn’t just informative—it’s operational. Multiple LLMs (Claude, local LLMs, etc.) or even other apps can access this MCP layer.

This abstraction means:

- All tools are reusable across assistants.

- Tool permissions and rate limits can be enforced centrally.

- The business logic stays secure, and the LLM can only access what it is allowed to.
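Central enforcement can be as simple as one gate in front of every tool call. The sketch below keeps a per-client allowlist of tools and a sliding-window rate limit; the client ids, limits, and the `Broker` class itself are hypothetical, and the clock is injectable so the behaviour can be tested without waiting.

```python
import time
from collections import defaultdict, deque
from typing import Callable, Deque, Dict, Set

class Broker:
    """Minimal gatekeeper: per-client tool allowlists plus a sliding-window rate limit."""

    def __init__(self, allowlist: Dict[str, Set[str]], max_calls: int, window_s: float,
                 clock: Callable[[], float] = time.monotonic):
        self.allowlist = allowlist
        self.max_calls = max_calls
        self.window_s = window_s
        self.clock = clock
        self.calls: Dict[str, Deque[float]] = defaultdict(deque)

    def check(self, client_id: str, tool_name: str) -> bool:
        """Return True and record the call if it is allowed, False otherwise."""
        if tool_name not in self.allowlist.get(client_id, set()):
            return False  # unknown client or tool not granted to this client
        now = self.clock()
        recent = self.calls[client_id]
        # Drop timestamps that have fallen out of the window.
        while recent and now - recent[0] >= self.window_s:
            recent.popleft()
        if len(recent) >= self.max_calls:
            return False  # rate limit reached for this window
        recent.append(now)
        return True
```

For example, `Broker({"claude-desktop": {"search_products"}}, max_calls=30, window_s=60)` would let that client search up to 30 times a minute but never call `place_order`. The counters here live in process memory; a deployment with several broker instances would need a shared store for them.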

By integrating tools, the LLM is no longer just a responder. It is a decision-making node in the business architecture—interfacing seamlessly with APIs, services, and databases.

I see the future of e-commerce as one where LLMs move from automation to orchestration. The value isn't in simulating intelligence; it's in designing systems where intelligence can operate safely and productively. With proper awareness, tools, and middleware, LLMs can understand user context, trigger real business actions, and learn from structured feedback loops. This architecture isn't speculative: it's already taking shape across AI-first commerce platforms. My job is to make sure the LLM doesn't just talk, but acts, collaborates, and contributes.

Bonus: Next Steps

As we push LLM integration further, several enhancements are on the roadmap to bring even more value to the user experience:

- Memory and Personalization – I’m exploring mechanisms to let the LLM remember user preferences, purchase history, and behavioral context across sessions. Done securely, this turns each interaction into a continuation of the last, not a cold start.

- Voice Support – Integrating voice capabilities will transform the assistant into a voice-native shopping companion—fast, hands-free, and natural.

- Multilingual Capabilities – By leveraging multilingual embeddings and translation-aware logic, the assistant will be able to serve users in their preferred language with fluency and nuance.

These next steps are not speculative—they are architectural extensions that follow naturally from a properly integrated LLM stack. With the right guardrails, they unlock a future of highly personalized, multi-modal, AI-first commerce.
